Problem

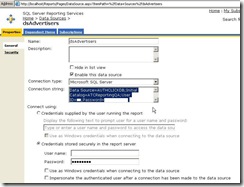

Our company has hundreds of SQL Server Reporting Services reports with daily, weekly, monthly, quarterly, yearly and ad hoc schedules. They are rendered in formats such as Web archive (MHTML), Excel, PDF, CSV, XML and TIFF, and sent to multiple users, departments and groups. Sometimes we have to run a subscription manually: to resend a report on an ad hoc basis, to redeliver it when the scheduled subscription failed, or to send it again after the underlying data was corrected.

In this tip I will show you an easy way to identify the SQL Server Agent job behind a scheduled Reporting Services subscription, so you can run that job to regenerate and redeliver the report.

Solution

SQL Server Reporting Services has come a long way since it was first released as an add-on for SQL Server 2000. Unfortunately, there are still some rough edges where it could be improved, and subscriptions are one of them.

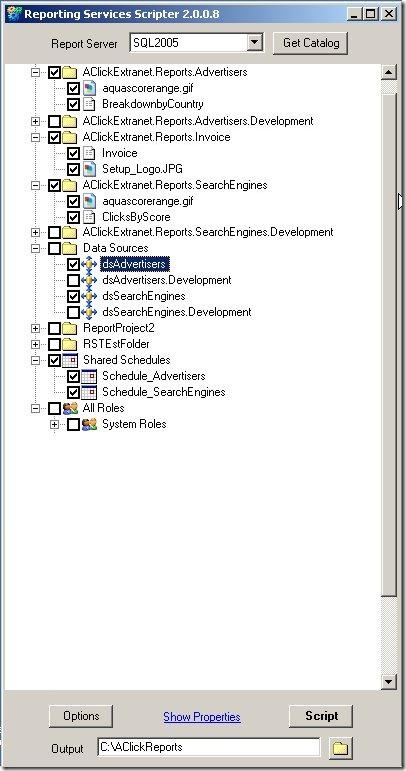

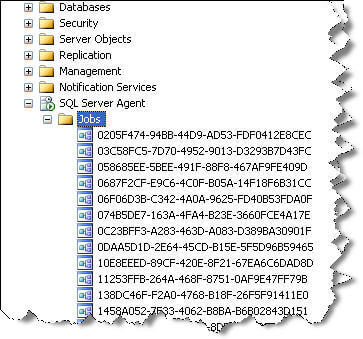

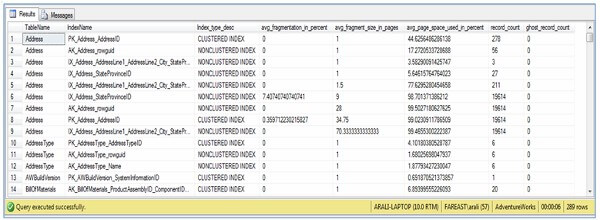

Manually initiating a subscription is a tedious task in SQL Server Reporting Services. Each subscription in Reporting Services is setup as a SQL Server Agent job, but the job names are NOT intuitive, rather named as a Unique Identifier as shown below.

Here is a screenshot of some of the subscriptions on a Reporting Services instance.
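If you prefer to see these GUID-named jobs and what they execute without clicking through SSMS, a query along the following lines lists them. This is a sketch that assumes the jobs are in the 'Report Server' job category, which is where Reporting Services normally creates them. Check the category name on your instance before relying on it.

```sql
-- Sketch: list the SQL Server Agent jobs created by Reporting Services and the
-- command each job step runs. Assumes the jobs use the 'Report Server' job
-- category; verify the category name on your instance.
SELECT
    j.name          AS JobName      -- the schedule's GUID
  , j.date_created
  , st.step_id
  , st.command                      -- typically an EXEC ...AddEvent call
FROM msdb.dbo.sysjobs j
JOIN msdb.dbo.syscategories c
  ON c.category_id = j.category_id
JOIN msdb.dbo.sysjobsteps st
  ON st.job_id = j.job_id
WHERE c.name = N'Report Server'
ORDER BY j.date_created DESC;
```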

Fortunately, SQL Server Reporting Services stores a rich set of metadata in tables in its ReportServer database, and in this tip we will take advantage of those tables. There are two methods to accomplish this task, and both are outlined below.

Option 1:

This method joins the dbo.ReportSchedule, dbo.Subscriptions and dbo.Catalog tables of the ReportServer database with the dbo.sysjobs table of the msdb database to find the SQL Server Agent job name. Additional columns, such as the report path, are returned so you can pick the correct report when reports with the same name exist in multiple folders. (ReportServer is the default name of the Reporting Services catalog database; adjust the name if yours is different.)

```sql
SELECT
    b.name AS JobName
  , e.Name
  , e.Path
  , d.Description
  , d.SubscriptionID
  , d.LastStatus
  , d.EventType
  , d.LastRunTime
  , b.date_created
  , b.date_modified
FROM ReportServer.dbo.ReportSchedule a
JOIN msdb.dbo.sysjobs b
  -- Agent job names are the ScheduleID GUID as text; comparing as text avoids
  -- converting non-GUID job names in msdb to uniqueidentifier, which could raise an error
  ON b.name = CAST(a.ScheduleID AS NVARCHAR(128))
JOIN ReportServer.dbo.Subscriptions d
  ON a.SubscriptionID = d.SubscriptionID
JOIN ReportServer.dbo.Catalog e
  ON d.Report_OID = e.ItemID
WHERE e.Name = 'Sales_Report';
```

|

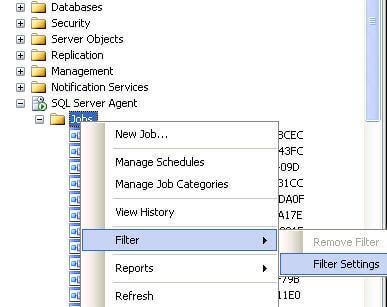

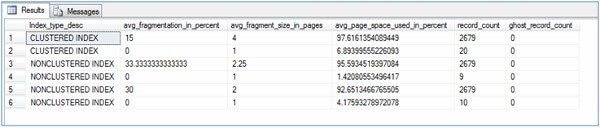

From the above resultset, grab the uniqueidentifier for the JobName column and filter the job in SSMS as shown below. Once the correct job is identified, run the SQL Server Agent job to deliver the subscription.
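Instead of filtering the job list in SSMS, you can also start the job from a query window with msdb.dbo.sp_start_job. In the minimal sketch below, the GUID is a placeholder for the JobName value returned by the query above, and the login running it needs permission to start the job (see the SQL Server Agent fixed database roles in msdb).

```sql
-- Placeholder: paste the JobName value returned by the Option 1 query.
DECLARE @JobName sysname = N'00000000-0000-0000-0000-000000000000';

IF EXISTS (SELECT 1 FROM msdb.dbo.sysjobs WHERE name = @JobName)
    -- Queues the job and returns immediately; it does not wait for the delivery to finish.
    EXEC msdb.dbo.sp_start_job @job_name = @JobName;
ELSE
    RAISERROR(N'No SQL Agent job named %s was found.', 16, 1, @JobName);
```

Because sp_start_job returns as soon as the job is started, check the job history or the LastStatus column afterwards to confirm the delivery.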

Option 2:

This option is better than the first because it completely eliminates the manual step of finding and starting the SQL Agent job. It also fires only the subscription you choose, even when that subscription uses a shared schedule.

In this option, we use the tables to generate the ReportServer.dbo.AddEvent call that a subscription's SQL Agent job makes, and then run that statement ourselves. It is a pure T-SQL solution.

After you run the query, copy the EXEC command in the ReportCommand column for the subscription you want to re-run, paste it into a query window and execute it to re-run the report.

```sql
SELECT
    -- Builds: EXEC ReportServer.dbo.AddEvent @EventType='TimedSubscription', @EventData='<SubscriptionID>'
    'EXEC ReportServer.dbo.AddEvent @EventType=''TimedSubscription'', @EventData='''
      + CAST(d.SubscriptionID AS VARCHAR(40)) + '''' AS ReportCommand
  , b.name AS JobName
  , d.SubscriptionID
  , e.Name
  , e.Path
  , d.Description
  , d.LastStatus
  , d.EventType
  , d.LastRunTime
  , b.date_created
  , b.date_modified
FROM ReportServer.dbo.ReportSchedule a
JOIN msdb.dbo.sysjobs b
  -- Compare as text so non-GUID job names in msdb are not converted to uniqueidentifier
  ON b.name = CAST(a.ScheduleID AS NVARCHAR(128))
JOIN ReportServer.dbo.Subscriptions d
  ON a.SubscriptionID = d.SubscriptionID
JOIN ReportServer.dbo.Catalog e
  ON d.Report_OID = e.ItemID
WHERE e.Name = 'Sales_Report';
```
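If you would rather not copy and paste the generated command, the same AddEvent call can be made in a loop over every subscription of a report. This is a sketch under stated assumptions: the report path /Sales/Sales_Report is hypothetical, filtering on Catalog.Path rather than Name avoids picking up same-named reports in other folders, and, like the generated ReportCommand, it fires each subscription immediately, so run it only when you really want the reports delivered again.

```sql
-- Hypothetical report path; replace it with the Path value from the query above.
DECLARE @ReportPath nvarchar(425) = N'/Sales/Sales_Report';
DECLARE @SubscriptionID nvarchar(260);

-- One row per subscription on the report
DECLARE sub_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT CAST(d.SubscriptionID AS nvarchar(260))
    FROM ReportServer.dbo.Subscriptions d
    JOIN ReportServer.dbo.Catalog e
      ON d.Report_OID = e.ItemID
    WHERE e.Path = @ReportPath;

OPEN sub_cursor;
FETCH NEXT FROM sub_cursor INTO @SubscriptionID;

WHILE @@FETCH_STATUS = 0
BEGIN
    -- Same call as the generated ReportCommand: queue a TimedSubscription event
    EXEC ReportServer.dbo.AddEvent
         @EventType = N'TimedSubscription'
       , @EventData = @SubscriptionID;

    FETCH NEXT FROM sub_cursor INTO @SubscriptionID;
END;

CLOSE sub_cursor;
DEALLOCATE sub_cursor;
```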